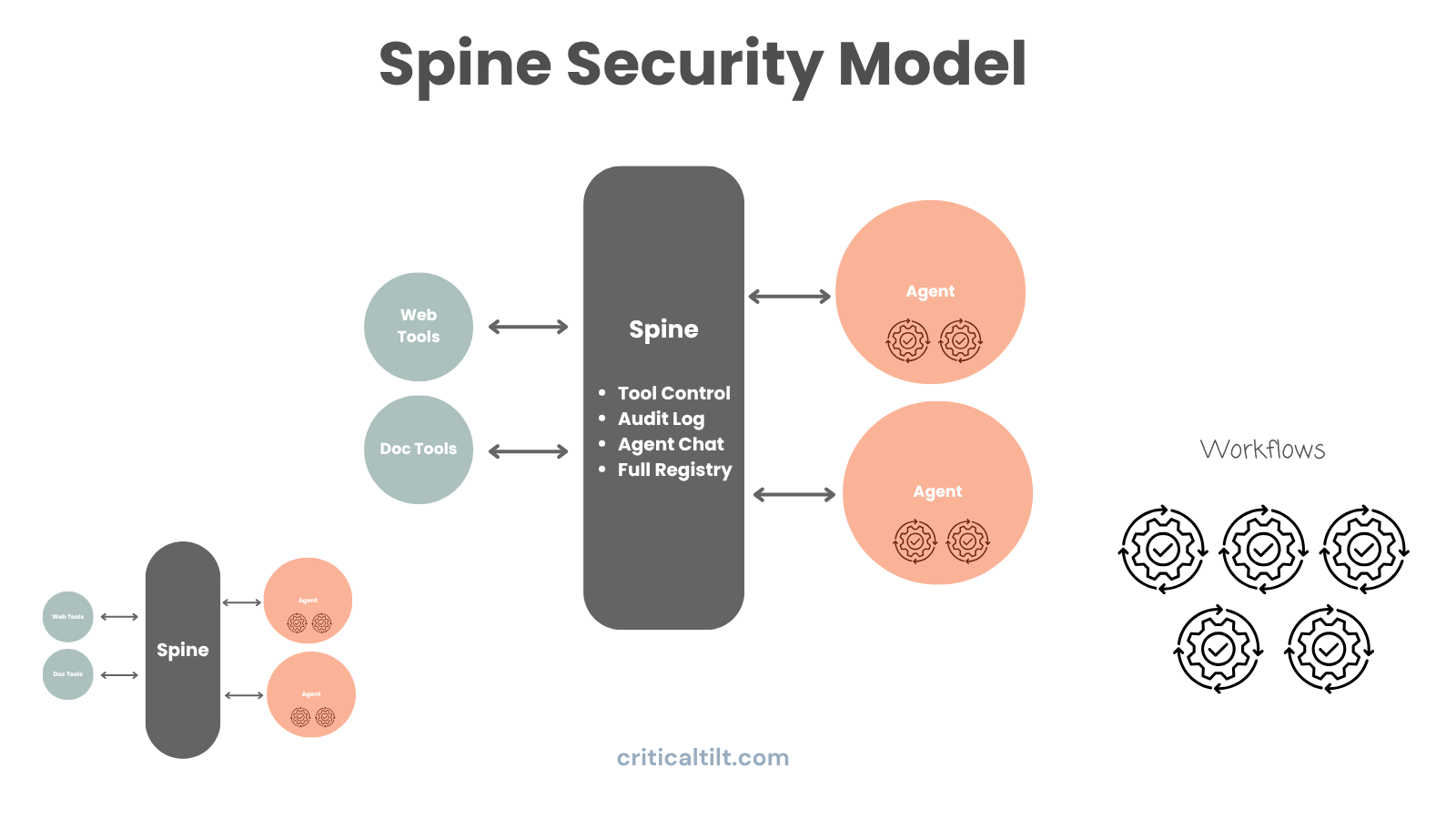

This is a production Python framework for building coordinated AI agent systems. Think of it like a nervous system for AI agents. Instead of having individual agents running in isolation, they attach to a central "spine" that handles resource sharing, security, tool access, and workflow orchestration.

The framework lets you build complex multi-agent applications where different AI agents work together on tasks that would be impractical or impossible for a single agent. For example, one agent can analyze complex procurement documents while another researches vendors, after which further agents map compliance requirements and generate evaluation reports. Multiple specialized agents coordinate through the spine to handle workflows that single-agent systems can't manage effectively.

Why It Matters to Your Operations

If your organization deals with complex document processing, multi-step workflows, or needs AI systems that can handle tasks requiring multiple specialized capabilities working together, you probably know the current solutions are limited. Most AI implementations are single-agent systems that can't coordinate effectively or scale beyond basic use cases.

This framework solves several expensive problems:

Labor reduction at scale. Multi-agent workflows can process documents that typically take 8-12 hours of expert review time and compress them to 15-20 minutes of compute time. For organizations processing multiple documents per month, this compounds fast. Implementation partners report 80-90% time savings on document analysis and evaluation workflows.

Better output quality through specialization. Instead of asking one AI agent to do everything (which produces mediocre results), different agents handle the specific parts of the workflow where they excel. Document parsing agents handle text extraction, research agents gather background intelligence, and analysis agents perform deep semantic matching. Each agent has the right tools and prompts for its specific job. The result is more accurate, more consistent, and actually useful for decision-making.

Cash flow benefits from faster processing. Evaluations that take days instead of weeks mean faster decisions, shorter cycles, and earlier project starts. For organizations bidding on contracts or processing applications, this can significantly improve operational efficiency and competitiveness.

Audit trails that actually work. Every agent action, tool execution, and data flow gets logged to PostgreSQL with full security context. When regulators or auditors ask "how did your system arrive at this determination," you have complete forensics. This matters for government agencies, financial services, healthcare, or anyone dealing with compliance frameworks.

Reduced error rates in complex workflows. The graph-based orchestration system ensures steps happen in the right order with proper validation between stages. Steps run in parallel where it makes sense (researching multiple entities simultaneously) and sequentially where dependencies exist (extraction must complete before analysis). The system enforces these constraints automatically.

Core Framework Features

Enterprise-Grade Prompt Management System

The framework includes a sophisticated prompt management system that goes well beyond simple string templates. Prompts are defined in YAML files with full template engine support including variables, conditionals, loops, and nested data structures.

Template Engine Features:

- Variable substitution with Handlebars-like syntax: `{{variable_name}}`

- Conditional rendering: `{{#if priority == "critical"}}urgent content{{/if}}`

- Loops with index access: `{{#each items}}{{@index}}. {{this}}{{/each}}`

- Nested loops for complex data structures

- Array length access: `{{items.length}}`

- Object property access in loops
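
To make the syntax above concrete, here is a minimal sketch of how a small subset of it could be rendered. It is not the framework's template engine: `render` is a hypothetical helper written for illustration, it handles only `{{variable}}`, `{{list.length}}`, and non-nested `{{#each}}` blocks, and it assumes `{{@index}}` is zero-based, as in Handlebars.

```python
import re


def render(template: str, context: dict) -> str:
    """Render a tiny, illustrative subset of Handlebars-like syntax."""

    def expand_each(match):
        # group(1) is the list name, group(2) is the loop body.
        items = context.get(match.group(1), [])
        body = match.group(2)
        return "".join(
            body.replace("{{@index}}", str(i)).replace("{{this}}", str(item))
            for i, item in enumerate(items)
        )

    # Expand non-nested {{#each name}}...{{/each}} blocks first.
    out = re.sub(
        r"\{\{#each (\w+)\}\}(.*?)\{\{/each\}\}", expand_each, template, flags=re.S
    )

    def substitute(match):
        name = match.group(1)
        if name.endswith(".length"):
            return str(len(context[name[: -len(".length")]]))
        return str(context[name])

    # Then substitute {{variable}} and {{list.length}}.
    return re.sub(r"\{\{([\w.]+)\}\}", substitute, out)


if __name__ == "__main__":
    template = "{{title}}: {{#each items}}{{@index}}. {{this}} {{/each}}({{items.length}})"
    print(render(template, {"title": "Reqs", "items": ["a", "b"]}))
```

A real engine would also need conditionals, nested loops, and escaping, which this sketch deliberately leaves out.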

Input/Output Schema Validation:

Every prompt can specify input and output schemas with type enforcement, required field validation, enum constraints, and custom validators. The system automatically validates data before rendering prompts and after parsing LLM responses.

Supported schema types:

- Primitive types: string, integer, float, boolean

- Complex types: list, dict, enum

- Nested structures with validation at every level

- Custom validators: min_length, max_length, range, regex patterns

Example schema validation:

    fields:
      priority:
        type: enum
        required: true
        values: ["critical", "high", "medium", "low"]
        validators:
          - type: regex
            value: "^(critical|high|medium|low)$"
      requirements:
        type: list
        item_type: string
        required: true
        validators:
          - type: min_length
            value: 1
            message: "Must have at least one requirement"
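
As a rough illustration of what validating data against a schema like this involves, the sketch below mirrors the YAML as a Python dict and checks a payload against it. The `validate` function and `SCHEMA` constant are hypothetical names, not the framework's API, and only the `enum` and `list` types and the `min_length` and `regex` validators are covered.

```python
import re

# Python mirror of the YAML schema shown above (illustrative only).
SCHEMA = {
    "priority": {
        "type": "enum",
        "required": True,
        "values": ["critical", "high", "medium", "low"],
        "validators": [{"type": "regex", "value": "^(critical|high|medium|low)$"}],
    },
    "requirements": {
        "type": "list",
        "item_type": "string",
        "required": True,
        "validators": [
            {"type": "min_length", "value": 1,
             "message": "Must have at least one requirement"}
        ],
    },
}

PRIMITIVES = {"string": str, "integer": int, "float": float, "boolean": bool}


def validate(data: dict, schema: dict) -> list:
    """Return a list of readable errors; an empty list means the data is valid."""
    errors = []
    for name, spec in schema.items():
        if name not in data:
            if spec.get("required"):
                errors.append(f"{name}: required field is missing")
            continue
        value = data[name]
        if spec["type"] == "enum":
            if value not in spec["values"]:
                errors.append(f"{name}: {value!r} is not an allowed value")
        elif spec["type"] == "list":
            if not isinstance(value, list):
                errors.append(f"{name}: expected a list")
                continue
            item_type = PRIMITIVES.get(spec.get("item_type"))
            if item_type and not all(isinstance(v, item_type) for v in value):
                errors.append(f"{name}: every item must be a {spec['item_type']}")
        # Custom validators run after the type check.
        for rule in spec.get("validators", []):
            if rule["type"] == "min_length" and len(value) < rule["value"]:
                errors.append(f"{name}: " + rule.get("message", "too short"))
            elif rule["type"] == "regex" and not re.fullmatch(rule["value"], str(value)):
                errors.append(f"{name}: does not match the required pattern")
    return errors
```

Returning a list of errors rather than raising on the first one makes it easier to report every problem with a prompt input or LLM output in one pass.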

Output Parsing with Error Recovery:

The output parser handles malformed LLM responses with extensive error correction:

- Extracts JSON from markdown code blocks

- Fixes unquoted keys

- Converts single quotes to double quotes

- Removes trailing commas

- Handles Python booleans (True/False) vs JSON (true/false)

This robustness means your workflows don't fail because an LLM returned slightly malformed JSON.
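
The repairs listed above can be approximated with a few regular expressions. The following is a minimal sketch, not the framework's parser: `parse_llm_json` is a hypothetical name, and the substitutions are naive (they would also rewrite matching text inside string values), so strict parsing is tried first and repairs are applied only as a fallback.

```python
import json
import re

FENCE = "`" * 3  # a markdown code fence marker


def parse_llm_json(text: str):
    """Parse JSON from an LLM reply, applying naive repairs if strict parsing fails."""
    # Extract the body of a fenced block if one is present.
    block = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, flags=re.S)
    candidate = (block.group(1) if block else text).strip()
    try:
        return json.loads(candidate)
    except json.JSONDecodeError:
        pass  # fall through to the repair steps

    # Remove trailing commas before a closing brace or bracket.
    repaired = re.sub(r",\s*([}\]])", r"\1", candidate)
    # Quote bare identifiers used as object keys.
    repaired = re.sub(r"([{,]\s*)([A-Za-z_]\w*)(\s*:)", r'\1"\2"\3', repaired)
    # Convert single quotes to double quotes.
    repaired = repaired.replace("'", '"')
    # Convert Python booleans to JSON booleans.
    for py, js in (("True", "true"), ("False", "false")):
        repaired = re.sub(rf"\b{py}\b", js, repaired)
    return json.loads(repaired)
```

If the repaired text still does not parse, `json.JSONDecodeError` propagates to the caller, which is the point where a workflow might retry the LLM call.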

Prompt Versioning and Testing:

Each prompt can include test cases that validate rendering with different inputs. The system tracks prompt versions in PostgreSQL, allowing rollback if a new prompt version causes issues. Audit logs capture every prompt execution with full context.

Database-Backed Prompt Storage:

Prompts can be stored in PostgreSQL instead of YAML files, enabling:

- Runtime prompt updates without code deployment

- A/B testing of different prompt versions

- Analytics on prompt effectiveness

- Collaborative prompt development with version control

Multi-Provider LLM Client System

The framework provides a unified interface for multiple LLM providers with intelligent switching, cost optimization, and provider-specific features.

Supported Providers:

- OpenAI (ChatGPT): GPT-4, GPT-3.5-turbo with full chat completion API

- Anthropic (Claude): Claude 3 Opus, Sonnet, Haiku with extended context

- Google (Gemini): Gemini Pro with multimodal capabilities

- Grok (xAI): Grok models with real-time data access

- NVIDIA NIM: Enterprise-optimized models with guaranteed latency

Provider Switching Strategies:

You can configure different providers for different tasks:

- Use expensive, high-quality models (GPT-4, Claude Opus) for critical analysis

- Use cheaper models (GPT-3.5-turbo, Claude Haiku) for simple transformations

- Fall back to alternative providers if primary is unavailable

- Route based on requirements (context length, multimodal support, cost)

Example multi-provider configuration:

    # High-quality analysis agent
    analysis_llm = create_llm("anthropic", model="claude-3-opus-20240229")

    # Fast document processing agent
    parsing_llm = create_llm("chatgpt", model="gpt-3.5-turbo")

    # Cost-optimized bulk operations
    bulk_llm = create_llm("nvidia", model="llama-3.1-nemotron-70b-instruct")
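
Routing by requirements, the last strategy listed above, could be sketched as picking the cheapest model that satisfies the task's constraints. Everything here is an assumption for illustration: `ModelOption` and `pick_model` are hypothetical names, and the context sizes and relative costs in the usage example are placeholders, not real provider figures.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    provider: str
    model: str
    max_context_tokens: int  # placeholder values, not real provider limits
    relative_cost: float     # placeholder ranking, lower is cheaper
    multimodal: bool = False


def pick_model(options: List[ModelOption], needed_context: int,
               needs_multimodal: bool = False) -> ModelOption:
    """Return the cheapest option that meets the context and modality needs."""
    eligible = [
        o for o in options
        if o.max_context_tokens >= needed_context
        and (o.multimodal or not needs_multimodal)
    ]
    if not eligible:
        raise LookupError("no configured model satisfies the requirements")
    return min(eligible, key=lambda o: o.relative_cost)


if __name__ == "__main__":
    catalog = [
        ModelOption("provider-a", "small-model", 8_000, 1.0),
        ModelOption("provider-b", "large-model", 100_000, 10.0, multimodal=True),
    ]
    print(pick_model(catalog, needed_context=4_000).model)
```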

LLM Response Handling:

The client provides structured response objects with:

- Content (text response)

- Token usage (prompt tokens, completion tokens, total)

- Cost calculation (based on provider pricing)

- Latency metrics

- Model metadata

- Finish reason (completed, length, content_filter, etc.)
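
One way to picture such a response object is as a small dataclass. This is a sketch under assumptions rather than the framework's actual class: the name `LLMResponse`, its field names, and the per-1,000-token pricing fields are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LLMResponse:
    content: str
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    finish_reason: str                # e.g. "completed", "length", "content_filter"
    input_price_per_1k: float = 0.0   # assumed pricing unit: cost per 1,000 tokens
    output_price_per_1k: float = 0.0

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def cost(self) -> float:
        """Cost of this call, derived from token usage and the configured prices."""
        return (
            self.prompt_tokens / 1000 * self.input_price_per_1k
            + self.completion_tokens / 1000 * self.output_price_per_1k
        )
```

Keeping cost as a derived property means the same response can be re-priced if provider pricing changes.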

Streaming Support:

All providers support streaming responses for real-time output:

    async for chunk in llm.chat_stream(messages):
        print(chunk.content, end='', flush=True)

This enables responsive UIs where users see results as they are generated, not just when the response is complete.

Automatic Retry Logic:

Built-in retry with exponential backoff for transient errors:

- Rate limit exceeded → wait and retry with increasing delays

- Network errors → retry up to configured max attempts

- Model overload → automatically fall back to alternative model

- API key issues → fail fast with clear error message
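
The four behaviors above can be sketched in a single retry wrapper. This is an illustrative outline only: the exception classes and `call_with_retry` are hypothetical names standing in for whatever the provider clients actually raise, and the jittered delay formula is one common choice rather than the framework's documented policy.

```python
import asyncio
import random


class RateLimitError(Exception):
    """Hypothetical: the provider asked us to slow down."""


class OverloadedError(Exception):
    """Hypothetical: the model is temporarily overloaded."""


class AuthError(Exception):
    """Hypothetical: the API key is missing or invalid."""


async def call_with_retry(call, fallback=None, max_attempts=4, base_delay=0.5):
    """Await call(), retrying transient errors with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return await call()
        except AuthError:
            raise  # fail fast: retrying will not fix a bad key
        except OverloadedError:
            if fallback is None:
                raise
            return await fallback()  # switch to the alternative model
        except (RateLimitError, ConnectionError):
            if attempt == max_attempts - 1:
                raise  # out of attempts
            # The delay doubles each attempt; jitter spreads out retries.
            delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            await asyncio.sleep(delay)
```

Here `call` and `fallback` are zero-argument coroutine functions, so a caller can wrap any provider request in a small `async def` or `functools.partial`.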

Spine-Based Security Architecture

Security is built into the architecture, not bolted on. The spine acts as a security boundary where all agent operations are validated against granted permissions.

Human-in-the-Loop Approval Workflow:

When an agent requests attachment to a spine:

- Request is logged to database with requester context

- Human approver receives notification (email, Slack, dashboard)

- Approver reviews: What does this agent do? What tools does it need? What data will it access?

- Approval grants specific permissions (read memory, write memory, execute tools, attach sub-agents)

- Agent receives security context with granted capabilities

- All subsequent operations validate against this context

Permission-Based Access Control:

The security context flows through every operation:

- Tool execution checks if agent has `execute_tools` permission

- Memory operations check if agent has `read_memory` or `write_memory` permission

- Sub-agent spawning checks if agent has `attach_agent` permission

- Database writes check if agent has `persist_data` permission

Security Context Inheritance:

When an agent spawns a sub-agent:

- Sub-agent inherits a subset of parent's permissions (never more)

- Security context tracks parent-child relationships

- Audit logs show the full permission chain

- Revoked parent permissions automatically cascade to children
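
The permission checks and inheritance rules described above can be modeled compactly. The sketch below is an assumption about how such a context might look, not the framework's implementation: `SecurityContext` and its methods are hypothetical. A child's effective permissions are computed as the intersection of its own grants with its parent's effective permissions, which gives both "never more than the parent" and cascading revocation.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class SecurityContext:
    agent_id: str
    granted: Set[str]
    parent: Optional["SecurityContext"] = None

    def effective(self) -> Set[str]:
        """Permissions usable right now, limited by every ancestor."""
        if self.parent is None:
            return set(self.granted)
        return self.granted & self.parent.effective()

    def require(self, permission: str) -> None:
        """Raise if the permission is not currently held."""
        if permission not in self.effective():
            raise PermissionError(f"{self.agent_id} lacks {permission}")

    def spawn_child(self, agent_id: str, requested: Set[str]) -> "SecurityContext":
        """Create a sub-agent context with at most the parent's permissions."""
        self.require("attach_agent")
        return SecurityContext(agent_id, requested & self.effective(), parent=self)


if __name__ == "__main__":
    parent = SecurityContext("analyst", {"read_memory", "execute_tools", "attach_agent"})
    child = parent.spawn_child("helper", {"read_memory", "write_memory"})
    print(sorted(child.effective()))     # write_memory is dropped
    parent.granted.discard("read_memory")
    print(sorted(child.effective()))     # revocation cascades to the child
```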

Tool-Level Security:

The spine controls which tools agents can access:

- Tools are registered in a global registry

- Spines are granted permission to specific tools

- Agents attached to the spine can only use tools the spine has permission for

- Every tool execution logs: agent ID, spine ID, tool ID, operation, parameters, result

This multi-layered security prevents agents from accessing capabilities they shouldn't have.

Temporal Security:

Security contexts can have expiration times:

- Temporary agents get short-lived permissions (1 hour, 8 hours, etc.)

- Long-running workflows have security contexts that don't expire

- Expired contexts automatically reject all operations

- System automatically cleans up expired temporary agents
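
Expiration can be sketched as a timestamp check performed before every operation. The names `TimedContext` and `sweep_expired` are hypothetical, and passing `now` explicitly is a choice made here so the logic is easy to test; the framework's own mechanism may differ.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


class TimedContext:
    def __init__(self, agent_id: str, ttl: Optional[timedelta],
                 issued_at: Optional[datetime] = None):
        self.agent_id = agent_id
        issued = issued_at or datetime.now(timezone.utc)
        # ttl=None models a long-running workflow whose context never expires.
        self.expires_at = issued + ttl if ttl is not None else None

    def is_expired(self, now: Optional[datetime] = None) -> bool:
        if self.expires_at is None:
            return False
        return (now or datetime.now(timezone.utc)) >= self.expires_at

    def check(self, now: Optional[datetime] = None) -> None:
        """Reject the operation if the context has expired."""
        if self.is_expired(now):
            raise PermissionError(f"security context for {self.agent_id} has expired")


def sweep_expired(contexts: List[TimedContext],
                  now: Optional[datetime] = None) -> List[TimedContext]:
    """Return only the contexts that are still valid (cleanup of temporary agents)."""
    return [c for c in contexts if not c.is_expired(now)]
```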

Comprehensive Audit Logging and Observability

Every operation in the framework generates audit logs with complete context. This isn't just logging for debugging. It's forensic-quality audit trails for compliance, security analysis, and system optimization.

What Gets Logged:

Agent Operations:

- Agent creation, initialization, execution, cleanup

- Agent attachment/detachment from spines

- State transitions and errors

- Parent-child agent relationships

Tool Executions:

- Tool ID, operation name, parameters

- Execution time, success/failure

- Agent and spine context

- Security validation results

LLM Interactions:

- Prompt text (full or hash for sensitive content)

- Model used, token counts, costs

- Response content (configurable retention)

- Latency metrics

- Error conditions

Workflow Executions:

- Graph execution start/complete

- Node execution with timing

- Edge transitions

- State at each step

- Parallel execution coordination

Security Events:

- Attachment requests (approved/denied)

- Permission grants/revocations

- Failed permission checks

- Security context expirations

Audit Log Structure:

Each audit entry includes:

- Timestamp (microsecond precision)

- Operation type (agent_execute, tool_execution, llm_call, etc.)

- Agent ID, spine ID (if applicable)

- Security context ID

- Input data (parameters, arguments)

- Output data (results, responses)

- Execution time (milliseconds)

- Success/failure status

- Error details (if failed)

- Correlation ID (for tracing related operations)
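
The fields listed above map naturally onto a record type. The following is a minimal sketch with hypothetical names (`AuditEntry`, `to_json`), showing one possible in-memory shape and a JSON serialization suitable for the local-file storage option; it is not the framework's schema.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    # ISO 8601 timestamp with microsecond precision.
    return datetime.now(timezone.utc).isoformat(timespec="microseconds")


@dataclass
class AuditEntry:
    operation_type: str                 # e.g. "agent_execute", "tool_execution", "llm_call"
    agent_id: str
    success: bool
    execution_ms: float
    spine_id: Optional[str] = None
    security_context_id: Optional[str] = None
    input_data: dict = field(default_factory=dict)
    output_data: dict = field(default_factory=dict)
    error: Optional[str] = None
    # Pass a shared correlation_id to link related operations in one workflow.
    correlation_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: str = field(default_factory=_utc_now)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)
```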

Audit Log Storage:

Logs can be written to:

- Local JSON files (development, small deployments)

- PostgreSQL (production, queryable)

- Elasticsearch (high-volume, full-text search)

- Cloud logging services (CloudWatch, Stackdriver)

Audit Log Querying:

The framework provides rich querying capabilities:

    # Get all failed LLM calls in the last hour
    failed_calls = await audit.query(
        operation_type="llm_call",
        success=False,
        time_range={"last": "1h"}
    )

    # Trace all operations for a specific workflow
    workflow_trace = await audit.trace(
        correlation_id="workflow_analysis_abc123"
    )

    # Cost analysis by agent
    agent_costs = await audit.aggregate(
        metric="llm_cost",
        group_by="agent_id",
        time_range={"last": "30d"}
    )

Observability Dashboards:

The audit system supports real-time monitoring:

- Active agents and their current states

- Tool usage patterns and error rates

- LLM costs by provider, model, agent

- Workflow execution times and bottlenecks

- Security events and anomalies

Graph-Based Workflow Orchestration

Workflows are defined as directed graphs where nodes are agent operations and edges define data flow and execution order. This provides flexibility that rigid pipelines can't match.

Workflow Patterns:

Sequential Pipeline:

    graph.add_node("parse", parse_document)
    graph.add_node("extract", extract_requirements)
    graph.add_node("analyze", analyze_compliance)
    graph.pipeline("parse", "extract", "analyze")

Parallel Execution:

    graph.add_node("research_entity_a", research_first_entity)
    graph.add_node("research_entity_b", research_second_entity)
    graph.parallel("research_entity_a", "research_entity_b")

Conditional Branching:

    graph.add_node("check_quality", quality_check)
    graph.add_node("manual_review", send_to_human)
    graph.add_node("auto_process", automatic_processing)
    graph.conditional(
        from_node="check_quality",
        condition=lambda state: state['quality_score'] < 0.8,
        true_node="manual_review",
        false_node="auto_process"
    )

Dynamic Routing:

    # Route to different nodes based on document type
    graph.add_dynamic_router(
        from_node="classify_document",
        router_func=lambda state: {
            'pdf': 'parse_pdf',
            'word': 'parse_word',
            'excel': 'parse_excel'
        }[state['document_type']]
    )

State Management:

The graph maintains state that flows between nodes. Each node receives the current state and returns updated state. The framework handles state persistence, validation, and recovery.
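
To show what "each node receives the current state and returns updated state" can mean in practice, here is a toy executor. It borrows the `add_node`, `pipeline`, and `parallel` method names from the examples above, but `MiniGraph` itself is a hypothetical stand-in written for this illustration, with no persistence, validation, or branching.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

State = Dict[str, object]
NodeFn = Callable[[State], Awaitable[State]]


class MiniGraph:
    def __init__(self) -> None:
        self.nodes: Dict[str, NodeFn] = {}
        # Each stage is a list of node names; names in one stage run concurrently.
        self.stages: List[List[str]] = []

    def add_node(self, name: str, fn: NodeFn) -> None:
        self.nodes[name] = fn

    def pipeline(self, *names: str) -> None:
        self.stages.extend([name] for name in names)

    def parallel(self, *names: str) -> None:
        self.stages.append(list(names))

    async def run(self, state: State) -> State:
        for stage in self.stages:
            # Every node in the stage gets its own copy of the current state.
            updates = await asyncio.gather(
                *(self.nodes[name](dict(state)) for name in stage)
            )
            # Merge the returned updates back into the shared state.
            for update in updates:
                state = {**state, **update}
        return state
```

Giving each node a copy of the state and merging the returned dicts keeps parallel nodes from mutating shared data mid-stage; if two parallel nodes write the same key, the later one in the stage wins in this sketch.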

Error Handling and Recovery:

Workflows can specify error handling at the node or graph level:

- Retry failed nodes with backoff

- Fall back to alternative nodes

- Skip optional nodes if they fail

- Roll back state to last checkpoint

- Send notifications for manual intervention

Workflow Resumption:

If a workflow fails or is interrupted:

- State is persisted to database at each node

- Workflow can resume from last successful node

- No need to reprocess completed steps

- Audit log shows full execution history including failures
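
The resumption behavior above amounts to checkpointing after every successful step and skipping completed steps on restart. In this sketch an in-memory dict stands in for the database, and `run_with_checkpoints` is a hypothetical helper, not a framework function.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]


def run_with_checkpoints(workflow_id: str, steps: List[Step],
                         initial_state: dict, store: Dict[str, dict]) -> dict:
    """Run steps in order, saving a checkpoint after each one succeeds."""
    checkpoint = store.get(workflow_id, {"done": [], "state": initial_state})
    done = list(checkpoint["done"])
    state = dict(checkpoint["state"])
    for name, fn in steps:
        if name in done:
            continue  # completed in an earlier run, no need to reprocess
        state = fn(state)  # may raise; the previous checkpoint stays intact
        done.append(name)
        store[workflow_id] = {"done": list(done), "state": dict(state)}
    return state
```

Calling the function again with the same `workflow_id` and `store` after a failure picks up at the first step that has not yet been recorded as done.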

How Government Agencies Can Use This

Government agencies face unique challenges with document processing, compliance verification, and decision support systems. The framework's security model, audit capabilities, and multi-agent coordination address these specific needs.

Procurement and Contract Analysis

Government procurement generates enormous document volumes: solicitations, proposals, contracts, modifications, performance reports. Processing these manually is slow and error-prone.

Multi-Agent Workflow:

- Document Classification Agent: Identifies document types (solicitation, proposal, contract, amendment, report)

- Parsing Agent: Extracts text, tables, requirements, pricing, delivery schedules

- Compliance Verification Agent: Validates against FAR/DFARS regulations, small business requirements, wage determinations

- Analysis Agent: Performs cost reasonableness checks, past performance evaluation, technical capability assessment

- Risk Assessment Agent: Identifies compliance gaps, delivery risks, cost risks

- Report Generation Agent: Creates evaluation reports, award justifications, file documentation

Benefits:

- Document review time reduced by 80-85%

- Consistent evaluation methodology across proposals

- Complete audit trail for protests and investigations

- Faster awards (better for both agency and vendors)

- Reduced risk of protests due to documentation quality

Grant Application Review and Award Management

Grant programs receive hundreds or thousands of applications. Reviewing for eligibility, technical merit, budget reasonableness, and compliance is resource-intensive.

Multi-Agent Workflow:

- Intake Agent: Validates application completeness, eligibility requirements

- Technical Review Agent: Evaluates project approach, methodology, team qualifications

- Budget Analysis Agent: Reviews budget justification, cost reasonableness, allowability

- Compliance Agent: Checks previous grant performance, audit findings, debarment status

- Comparative Analysis Agent: Scores applications consistently against evaluation criteria

- Recommendation Agent: Generates funding recommendations with supporting rationale

Benefits:

- Application review cycle time reduced from months to weeks

- More applications reviewed with same staff

- Consistent scoring methodology

- Better documentation of funding decisions

- Improved program effectiveness through data analysis

Regulatory Compliance and Enforcement

Agencies must review permit applications, inspection reports, compliance filings, and enforcement actions. Manual review creates backlogs and inconsistent enforcement.

Multi-Agent Workflow:

- Document Processing Agent: Ingests filings, inspection reports, permit applications

- Regulation Mapping Agent: Identifies applicable regulations, requirements, thresholds

- Compliance Checking Agent: Validates against technical standards, reporting requirements

- Anomaly Detection Agent: Flags unusual patterns, potential violations

- Risk Prioritization Agent: Scores entities by compliance risk, prioritizes inspections

- Enforcement Recommendation Agent: Suggests appropriate actions (warning, fine, referral)

Benefits:

- Faster permit approvals (better constituent service)

- More effective targeting of enforcement resources

- Consistent enforcement decisions

- Complete documentation for legal proceedings

- Trend analysis to identify systemic issues

FOIA Request Processing

Freedom of Information Act requests require searching documents, determining releasability, applying exemptions, and tracking through completion. Processing backlogs are common.

Multi-Agent Workflow:

- Request Intake Agent: Classifies request, determines scope, identifies relevant record systems

- Document Search Agent: Queries databases, file systems, email archives in parallel

- Relevance Filtering Agent: Identifies responsive documents, removes duplicates

- Exemption Analysis Agent: Flags content requiring redaction (privacy, classified, deliberative, etc.)

- Redaction Agent: Proposes redactions with legal justification

- Review Package Agent: Generates review package for human approval

Benefits:

- Faster response times (better customer service, fewer lawsuits)

- More consistent exemption determinations

- Complete audit trail of search and review

- Reduced litigation risk through better documentation

- Staff can focus on complex determinations, not searching

Casework and Benefits Adjudication

Agencies process applications for benefits, licenses, permits, clearances. Each requires document verification, eligibility determination, and decision documentation.

Multi-Agent Workflow:

- Application Intake Agent: Validates completeness, initiates background checks

- Document Verification Agent: Confirms identity documents, credentials, supporting evidence

- Eligibility Agent: Checks criteria against databases (income, residence, work history, etc.)

- Risk Assessment Agent: Identifies fraud indicators, inconsistencies

- Decision Support Agent: Recommends approval/denial with supporting evidence

- Notification Agent: Generates decision letters with appeal rights

Benefits:

- Faster case processing (better service, reduced backlogs)

- Consistent application of eligibility criteria

- Reduced fraud through better anomaly detection

- Complete case documentation for appeals

- Staff can focus on edge cases requiring judgment

Incident and Investigation Management

Agencies investigate complaints, safety incidents, fraud allegations, and violations. Investigations require document review, witness interview analysis, evidence compilation.

Multi-Agent Workflow:

- Intake Agent: Classifies complaint, determines jurisdiction, initiates case file

- Evidence Collection Agent: Gathers documents, statements, reports from multiple sources

- Timeline Construction Agent: Creates event timeline from multiple sources

- Analysis Agent: Identifies patterns, inconsistencies, corroborating evidence

- Legal Review Agent: Evaluates against regulatory or statutory requirements

- Report Generation Agent: Creates investigation report with findings and recommendations

Benefits:

- Faster investigations (better deterrence, quicker justice)

- More thorough evidence review

- Consistent analysis methodology

- Complete case documentation

- Better resource allocation to high-priority cases

How Manufacturers Can Use This

Quality Control and Inspection Report Analysis

Manufacturers generate inspection reports, quality control data, supplier certifications, and non-conformance records. Analyzing these manually is slow and inconsistent.

Multi-Agent Workflow:

- Document Processing Agent: Parses inspection reports (PDFs, images with OCR, structured data files)

- Data Extraction Agent: Pulls measurements, pass/fail results, inspector notes, timestamps

- Compliance Checking Agent: Validates against specifications, tolerances, industry standards (ISO 9001, AS9100, etc.)

- Trend Analysis Agent: Identifies patterns across batches, shifts, suppliers

- Risk Assessment Agent: Flags potential quality issues before they become expensive failures

- Reporting Agent: Generates executive summaries, compliance certificates, action items

Business Impact:

- Inspection report analysis reduced from 2 hours to 5 minutes

- Catch quality trends earlier (detecting drift before out-of-spec parts ship)

- Automated compliance documentation for customer audits

- Faster root cause analysis when defects occur

- Better supplier performance tracking

Bill of Materials (BOM) Verification and Sourcing

Complex manufactured products have BOMs with thousands of parts. Verifying part availability, pricing, lead times, and alternatives across suppliers is tedious manual work.

Multi-Agent Workflow:

- BOM Parser Agent: Extracts part numbers, quantities, specifications from engineering documents

- Supplier Query Agent: Searches multiple supplier APIs in parallel (Digi-Key, Mouser, McMaster-Carr, Alibaba)

- Price Analysis Agent: Compares pricing across suppliers, accounts for volume discounts, identifies cost optimization opportunities

- Lead Time Agent: Checks inventory levels and lead times, flags long-lead items

- Alternative Parts Agent: Suggests equivalent parts from alternative suppliers, validated against specifications

- Risk Assessment Agent: Identifies single-source parts, supplier concentration risks, geopolitical supply chain issues

Business Impact:

- BOM verification reduced from days to hours

- 10-15% cost savings through better supplier selection and volume consolidation

- Earlier identification of supply chain risks

- Automated alerts when long-lead parts threaten schedule

- Better negotiating position with data on alternative suppliers

Production Line Optimization and Downtime Analysis

Manufacturers collect massive amounts of machine data, downtime logs, maintenance records, and production metrics. Extracting actionable insights requires specialized analysis that most organizations do manually or ignore.

Multi-Agent Workflow:

- Data Collection Agent: Ingests machine logs, SCADA data, maintenance tickets, shift reports

- Anomaly Detection Agent: Identifies unusual patterns in machine performance, cycle times, reject rates

- Correlation Analysis Agent: Finds relationships between maintenance events, operator shifts, environmental conditions, and production issues

- Predictive Maintenance Agent: Models failure patterns and recommends proactive maintenance

- Root Cause Agent: Analyzes downtime events to identify underlying causes (machine issues vs. material quality vs. operator training)

- Optimization Agent: Recommends schedule changes, setup adjustments, tooling replacements to maximize throughput

Business Impact:

- Downtime reduced by 20-30% through predictive maintenance

- OEE (Overall Equipment Effectiveness) improvement of 5-10 percentage points

- Faster root cause analysis (hours instead of weeks)

- Maintenance budget optimization (fix what needs fixing, not calendar-based PM)

- Better capital investment decisions (data showing which machines limit capacity)

Regulatory Compliance and Documentation

Manufacturers in regulated industries (medical devices, aerospace, automotive) must maintain extensive documentation and demonstrate compliance with FDA, FAA, ISO, ITAR, and other regulatory frameworks.

Multi-Agent Workflow:

- Document Classification Agent: Categorizes documents by type (DMR, DHF, DHR, design controls, risk analysis, etc.)

- Completeness Checking Agent: Verifies all required documents exist for regulatory submissions

- Cross-Reference Agent: Validates that specifications match across engineering drawings, process documents, test protocols

- Audit Trail Agent: Tracks document changes, approvals, version history

- Gap Analysis Agent: Identifies missing documentation or non-compliances before audits

- Report Generation Agent: Creates regulatory submission packages, audit responses, CAPA documentation

Business Impact:

- Pre-submission review time reduced from weeks to days

- Fewer FDA observations or FAA findings (better compliance before audit)

- Faster CAPA (Corrective and Preventive Action) response

- Reduced risk of warning letters or manufacturing holds

- Lower consulting costs for regulatory submissions

Engineering Change Order (ECO) Impact Analysis

When engineering makes a design change, manufacturing needs to assess impacts on existing production, inventory, suppliers, and customer deliveries. This analysis typically involves multiple departments and takes days.

Multi-Agent Workflow:

- Change Parsing Agent: Extracts affected part numbers, specifications, revision levels from ECO documents

- BOM Impact Agent: Identifies where changed parts are used (parent assemblies, finished goods)

- Inventory Analysis Agent: Checks existing stock of obsolete parts, raw materials, work-in-progress

- Customer Impact Agent: Determines which customer orders are affected, contract implications

- Cost Analysis Agent: Calculates scrap costs, rework costs, expediting fees, inventory write-downs

- Schedule Impact Agent: Models timeline for change implementation, identifies critical path impacts

- Supplier Notification Agent: Generates purchase order changes, supplier notifications

Business Impact:

- ECO impact analysis time reduced from 5 days to 4 hours

- Better decisions on timing (implement now vs. wait for natural inventory depletion)

- Fewer customer surprises (proactive notification of schedule impacts)

- Reduced scrap and obsolescence costs

- Faster new product introduction (ECO process doesn't become bottleneck)

Customer RFQ (Request for Quote) Response

Manufacturers receive RFQs that require quick turnaround (24-48 hours). Creating accurate quotes involves reviewing specifications, checking capacity, estimating costs, assessing risks.

Multi-Agent Workflow:

- RFQ Parser Agent: Extracts requirements, quantities, delivery dates, special terms

- Feasibility Analysis Agent: Checks manufacturing capabilities, tooling requirements, material availability

- Capacity Planning Agent: Models production schedule to determine realistic delivery dates

- Cost Estimation Agent: Calculates material costs, labor hours, overhead, margin

- Risk Assessment Agent: Flags technical risks, supply chain constraints, new processes required

- Competitive Analysis Agent: Researches customer's industry, typical margins, likely competition

- Quote Generation Agent: Creates formal quote documents with terms and conditions

Business Impact:

- Quote turnaround time reduced from 3 days to 8 hours

- Higher win rate (faster response wins more business)

- More accurate quotes (fewer money-losing jobs from estimation errors)

- Better prioritization (spend more time on high-value opportunities)

- Improved margin management (data-driven pricing instead of guesswork)

Technical Architecture

The system is async-first (built on asyncio) with connection pooling, transaction management, and proper resource cleanup. Type hints are used throughout for IDE support and maintainability. Error handling is comprehensive, with retry logic, exponential backoff, and graceful degradation.

The database schema is normalized, with foreign key relationships, cascading deletes where appropriate, JSON columns for flexible metadata, and indexes on common query patterns. Migrations are version-controlled and support both schema evolution and data transformations.

The security model uses context-based permissions that flow through the entire call chain. When an agent executes a tool, the security context includes the agent's identity, the spine it's attached to, granted permissions, and optional parent agent context for sub-agent operations. The tool validates this context before execution, and violations are logged with full details.

What This Doesn't Do

This is infrastructure, not a complete application. You'll need to write your agents, define your workflows, configure your tools, and set up your database. The framework provides the foundation, but you're responsible for the implementation.

It's not a no-code solution. If you want to build sophisticated multi-agent systems, you're going to write Python. The framework reduces complexity and handles the hard infrastructure problems, but it assumes you have engineering resources.

This isn't optimized for simple single-agent chatbots. If you just need one AI agent answering customer support questions, use something simpler. This framework shines when you need multiple specialized agents working together on complex workflows.

The framework doesn't include pre-built agents for your specific industry or use case. You'll need to build workflows for your specific requirements using the framework's components.

Why I Built This

Most AI agent frameworks are built for demos and hackathons. They fall apart when you try to build production systems that handle real documents, real integrations, real security requirements, and real scale. I needed something that could handle complex multi-document analysis workflows without requiring a complete rewrite when moving from prototype to production.

The spine architecture came from working with organizations that needed multiple AI agents coordinating on shared resources. The security model came from compliance requirements where you need to demonstrate proper access controls and audit trails. The graph orchestration came from workflows that were too complex for rigid pipelines but needed more structure than just "let the agents figure it out."

The prompt management system came from the reality that prompts are code. They need version control, testing, validation, and the ability to update without redeployment. The multi-provider LLM support came from not wanting vendor lock-in and needing to optimize costs by using the right model for each task.

This is the framework I wish existed when I started building multi-agent systems for production use cases. It handles the infrastructure problems (database persistence, security, error recovery, tool management, workflow orchestration) so you can focus on the interesting parts (what your agents do, how they coordinate, what problems they solve).

If you're building AI systems that go beyond simple single-agent interactions and need to demonstrate that they work reliably, securely, and at scale, this framework provides the foundation. The code is production-tested, the architecture is sound, and the examples show you how all the pieces fit together.

For government agencies and manufacturers specifically, this framework addresses the reality that your operations generate massive amounts of data that could drive better decisions if you could actually analyze it. The framework gives you the infrastructure to build AI systems that actually use it.